30 Days of ML

I’m taking the Stanford CS229 Machine Learning course to really understand how generative models like Stable Diffusion and DALL-E work.

I think one of my strongest skills is synthesis: finding meaning in heaps of data and presenting it simply. I suspect that understanding how these engines work will not only be a useful career skill but will also teach me to think better.

The course is a 20-video YouTube playlist. I found it on this GitHub repo.

I’m also taking the Supervised Machine Learning course on Coursera. Andrew Ng, who teaches both courses, says the Coursera course (CS229A) is an applied version of CS229.

Day 1

- Machine learning is how you teach computers to perform tasks without explicitly programming them.

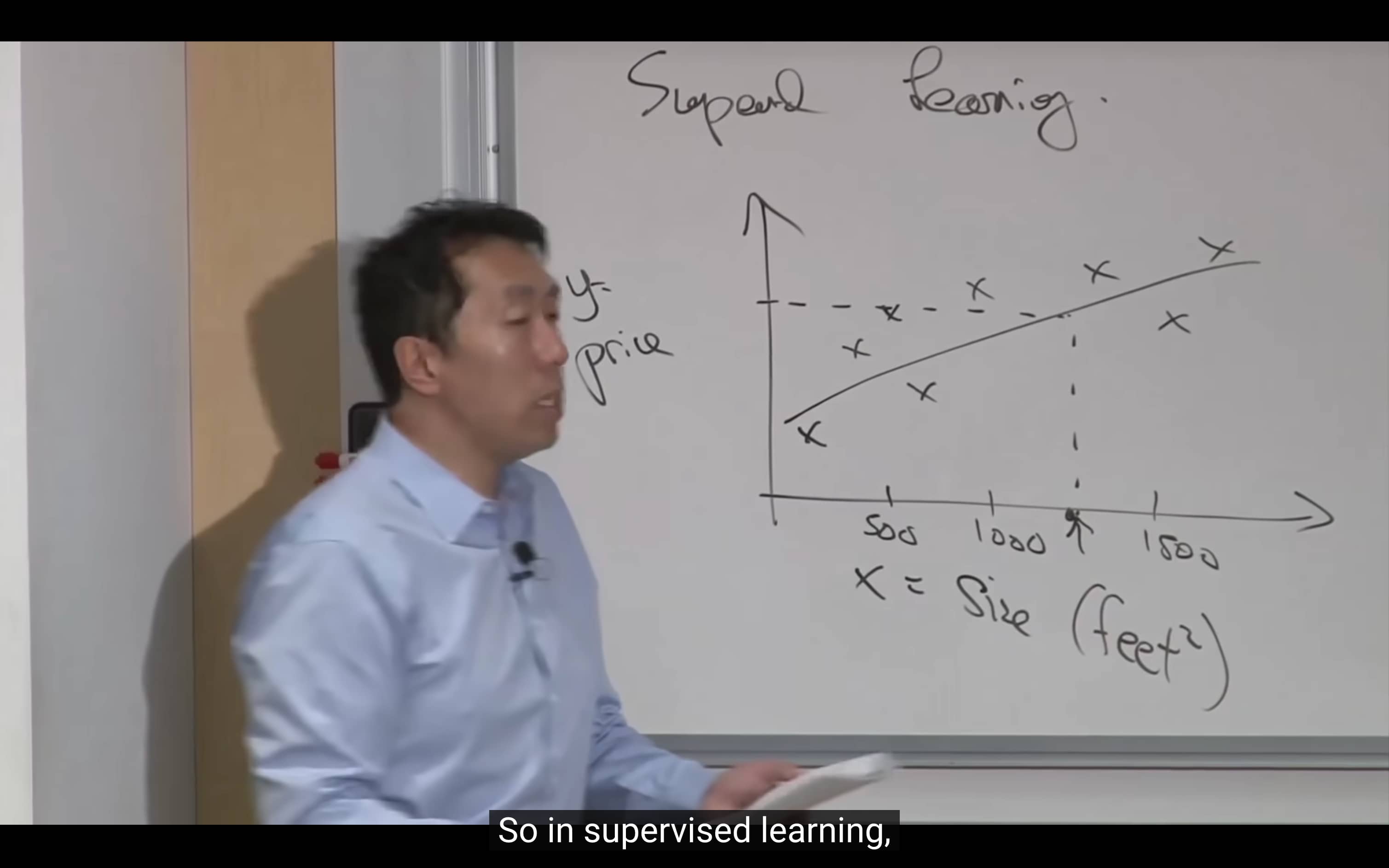

- The most common form is “Supervised Learning”, where you give the computer a dataset of inputs paired with labels (x, y). From these examples, the computer learns a mapping that predicts y for a given x.

- In “Unsupervised Learning”, on the other hand, the computer is given a dataset without labels (just x) and asked to find structure in it, e.g. grouping similar data points into clusters.

- Supervised learning problems come in two main types: regression and classification.

- In a regression problem, the machine predicts a continuous quantity, e.g. given a dataset of house sizes and their prices, predict the price of a house from its size.

- In a classification problem, the machine predicts a discrete outcome, e.g. given a dataset of tumor sizes labeled malignant or benign, predict whether a new tumor is malignant.

- In a Carnegie Mellon experiment (ALVINN), a computer learned to drive by capturing images of the road 10 times per second and matching them to a human driver’s steering actions.

- The strategy you choose when applying ML matters a lot; the course covers this under Learning Theory.
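To make the house-price regression example concrete, here is a minimal sketch of fitting a straight line by ordinary least squares in plain Python. The data points, the units (square feet and thousands of dollars) and the `fit_line` helper are made up for illustration; this is one simple way to do regression, not necessarily the exact formulation the course uses.

```python
# Minimal sketch of univariate linear regression (ordinary least squares).
# Assumption: hypothetical house data, sizes in square feet, prices in $1000s.

def fit_line(xs, ys):
    """Return (slope, intercept) minimizing squared error of y ≈ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form least-squares solution: slope = cov(x, y) / var(x).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training set (x = size, y = price); not real data.
sizes = [1000, 1500, 2000, 2500]
prices = [200, 290, 410, 500]

slope, intercept = fit_line(sizes, prices)

# Predict a continuous quantity for a house size not in the training set.
predicted = slope * 1800 + intercept
print(f"Predicted price for 1800 sq ft: ${predicted:.0f}k")
```

The output is a number on a continuous scale rather than one of a fixed set of answers, which is what makes this a regression problem.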
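The tumor classification example can be sketched with logistic regression trained by gradient descent, one common approach to classification. The tumor sizes, labels, learning rate and iteration count below are hypothetical, and centering the feature (subtracting its mean) is a simplification chosen here so training converges quickly.

```python
import math

# Hypothetical data: tumor size in cm, label 1 = malignant, 0 = benign (not real data).
sizes = [1.0, 1.5, 2.0, 3.0, 3.5, 4.0]
labels = [0, 0, 0, 1, 1, 1]

def sigmoid(z):
    """Squash any real number into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

# Center the feature so gradient descent converges quickly.
mean_size = sum(sizes) / len(sizes)
xs = [s - mean_size for s in sizes]

w, b = 0.0, 0.0
learning_rate = 0.5  # assumed value, chosen for this toy data
for _ in range(1000):
    # Gradients of the average log loss with respect to w and b.
    errors = [sigmoid(w * x + b) - y for x, y in zip(xs, labels)]
    grad_w = sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = sum(errors) / len(xs)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

def predict(size):
    """Return 1 (malignant) if the modeled probability is at least 0.5, else 0 (benign)."""
    p = sigmoid(w * (size - mean_size) + b)
    return 1 if p >= 0.5 else 0

print(predict(1.2), predict(3.8))
```

The model first outputs a probability, and thresholding it at 0.5 turns that into a discrete malignant/benign label, which is what distinguishes classification from regression.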
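For unsupervised learning, a classic way to find structure in unlabeled data is clustering. Below is a minimal k-means sketch, assuming made-up 2-D points and starting from the first k points rather than random centroids so the result is reproducible; the `kmeans` helper is hypothetical, written just for this note (requires Python 3.8+ for `math.dist`).

```python
import math

# Hypothetical unlabeled 2-D points (no y values): two visible groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]

def kmeans(points, k, iterations=10):
    """Group points into k clusters; returns (centroids, assignments)."""
    # Simplification: start from the first k points instead of a random choice.
    centroids = list(points[:k])
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its member points.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return centroids, assignments

centroids, assignments = kmeans(points, k=2)
print(assignments)
```

Note that `points` carries no labels: k-means only uses distances between inputs, and the group numbers 0 and 1 are arbitrary names rather than learned categories.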