30 Days of ML #2

This is Day 12 of my AI research (since I started counting).

About a month ago, I got Samson to help me put together a primer on image-generating AI tools.

I recently reread the doc and understood it a bit better. I can’t wrap my head around diffusion models and generative pre-training yet, but I suspect that as I continue Andrew Ng’s course, I’ll come to understand them.

Andrew Ng also writes a blog on AI that I subscribe to, and in a recent email he said this:

> AI is technically complex, and it has its fair share of smart and highly capable people. But, of course, it is easy to forget that to become good at anything, the first step is to suck at it. If you’ve succeeded at sucking at AI – congratulations, you’re on your way!
>
> I once struggled to understand the math behind linear regression. I was mystified when logistic regression performed strangely on my data, and it took me days to find a bug in my implementation of a basic neural network. Today, I still find many research papers challenging to read, and just yesterday I made an obvious mistake while tuning a neural network hyperparameter (that fortunately a fellow engineer caught and fixed).

Very encouraging.

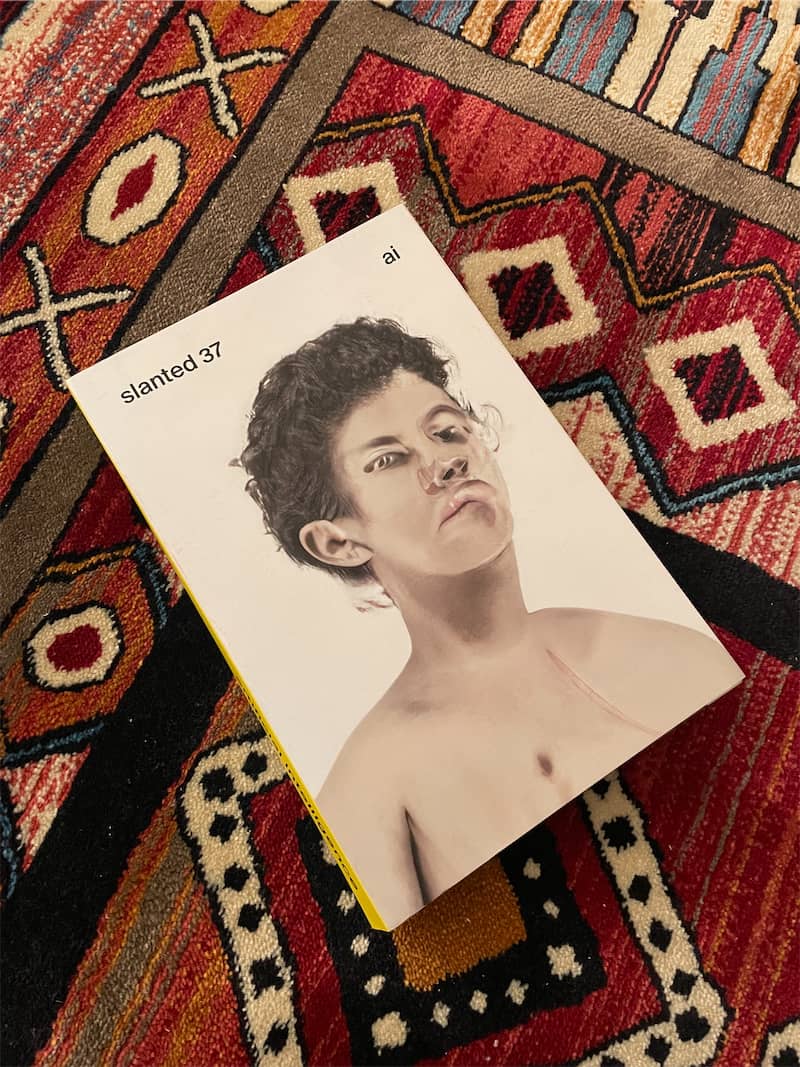

I also rediscovered Slanted 37, an issue of the magazine devoted to AI that I bought at least a year ago. So timely. It feels like I’m learning on three fronts now: the Coursera program is my anchor, the YouTube course exposes me to the math, and Slanted introduces me to real-world applications in art, design and technology.

On y va! (Let’s go!)